Big data refers to extremely large and diverse collections of structured, unstructured, and semi-structured data that continue to grow exponentially over time. These datasets are so large and complex in volume, velocity, and variety that traditional data management systems cannot store, process, or analyze them.

The amount and availability of data are growing rapidly, spurred by advances in digital technology such as connectivity, mobility, the Internet of Things (IoT), and artificial intelligence (AI). As data continues to expand and proliferate, new big data tools are emerging to help companies collect, process, and analyze data at the speed needed to gain the most value from it.

Organizations use big data in machine learning, predictive modeling, and other advanced analytics to solve business problems and make informed decisions.

Big data examples

Data can be a company’s most valuable asset. Using big data to reveal insights can help you understand the areas that affect your business—from market conditions and customer purchasing behaviors to your business processes.

Here are some big data examples that are helping transform organizations across every industry:

- Tracking consumer behavior and shopping habits to deliver personalized retail product recommendations to individual customers

- Monitoring payment patterns and analyzing them against historical customer activity to detect fraud in real time

- Combining data and information from every stage of an order’s shipment journey with hyperlocal traffic insights to help fleet operators optimize last-mile delivery

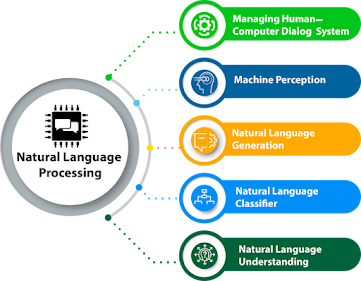

- Using AI-powered technologies like natural language processing to analyze unstructured medical data (such as research reports, clinical notes, and lab results) to gain new insights for improved treatment development and enhanced patient care

- Using image data from cameras and sensors, as well as GPS data, to detect potholes and improve road maintenance in cities

- Analyzing public datasets of satellite imagery and geospatial datasets to visualize, monitor, measure, and predict the social and environmental impacts of supply chain operations
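The fraud detection example above, which compares a payment against a customer's historical activity, can be illustrated with a minimal sketch. It assumes a simple z-score rule on payment amounts; the function name `is_suspicious`, the `threshold` of 3 standard deviations, and the sample amounts are all hypothetical. Real fraud systems typically combine many more signals than amount alone.

```python
from statistics import mean, stdev


def is_suspicious(history, amount, threshold=3.0):
    """Flag a payment whose amount deviates strongly from past payments.

    history   -- list of the customer's previous payment amounts
    amount    -- the new payment to check
    threshold -- hypothetical cutoff, in standard deviations
    """
    if len(history) < 2:
        return False  # not enough history to estimate a spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past payments were identical; any different amount stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


# Illustrative data only, not taken from a real system.
past_payments = [42.0, 38.5, 51.0, 45.25, 40.0]
print(is_suspicious(past_payments, 47.0))   # False: close to past amounts
print(is_suspicious(past_payments, 900.0))  # True: far outside past amounts
```

In a streaming setting, a check like this would likely run per transaction as payments arrive, with the history kept in a fast lookup store.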

In addition to volume, velocity, and variety, big data is often described by three further characteristics:

- Veracity: Big data can be messy, noisy, and error-prone, which makes it difficult to control the quality and accuracy of the data. Large datasets can be unwieldy and confusing, while smaller datasets could present an incomplete picture. The higher the veracity of the data, the more trustworthy it is.

- Variability: The meaning of collected data is constantly changing, which can lead to inconsistency over time. These shifts include not only changes in context and interpretation but also data collection methods based on the information that companies want to capture and analyze.

- Value: It’s essential to determine the business value of the data you collect. Big data must contain the right data and then be effectively analyzed in order to yield insights that can help drive decision-making.
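One rough way to put a number on veracity is to measure how many records are complete. The sketch below assumes records arrive as dictionaries and treats a record as complete when every required field is present and non-empty; the function `quality_report`, the field names, and the sample records are hypothetical, and completeness is only one of several possible quality measures.

```python
def quality_report(records, required_fields):
    """Summarize how many records have every required field filled in."""
    total = len(records)
    complete = sum(
        1
        for record in records
        # A field counts as missing if it is absent, None, or an empty string.
        if all(record.get(name) not in (None, "") for name in required_fields)
    )
    return {
        "total": total,
        "complete": complete,
        "completeness": complete / total if total else 0.0,
    }


# Illustrative records only.
sample = [
    {"id": 1, "amount": 10.0, "country": "DE"},
    {"id": 2, "amount": None, "country": "FR"},  # missing amount
    {"id": 3, "amount": 7.5, "country": ""},     # empty country
    {"id": 4, "amount": 3.0, "country": "US"},
]
print(quality_report(sample, ["id", "amount", "country"]))
# {'total': 4, 'complete': 2, 'completeness': 0.5}
```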

Big data benefits

- Enhanced decision-making. An organization can glean important insights, risks, patterns, or trends from big data. Large datasets are meant to be comprehensive and to encompass as much information as the organization needs to make better decisions. Big data insights let business leaders quickly make data-driven decisions that impact their organizations.

- Better customer and market insights. Big data that covers market trends and consumer habits gives an organization the important insights it needs to meet the demands of its intended audiences. Product development decisions, in particular, benefit from this type of insight.

- Cost savings. Big data can be used to pinpoint ways businesses can enhance operational efficiency. For example, analysis of big data on a company's energy use can help it be more efficient.

- Positive social impact. Big data can be used to identify solvable problems, such as improving healthcare or tackling poverty in a certain area.

Big data challenges

- Architecture design. Designing a big data architecture focused on an organization's processing capacity is a common challenge for users. Big data systems must be tailored to an organization's particular needs. These types of projects are often do-it-yourself undertakings that require IT and data management teams to piece together a customized set of technologies and tools.

- Skill requirements. Deploying and managing big data systems requires skills different from those that database administrators and developers focused on relational software typically possess.

- Costs. Using a managed cloud service can help keep costs under control. However, IT managers still must keep a close eye on cloud computing use to make sure costs don't get out of hand.

- Migration. Migrating on-premises data sets and processing workloads to the cloud can be a complex process.

- Accessibility. One of the main challenges in managing big data systems is making the data accessible to data scientists and analysts, especially in distributed environments that include a mix of different platforms and data stores. To help analysts find relevant data, data management and analytics teams are increasingly building data catalogs that incorporate metadata management and data lineage functions.

- Integration. The process of integrating sets of big data is also complicated, particularly when data variety and velocity are factors.
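The data catalog idea mentioned under accessibility can be sketched as a small in-memory structure that stores metadata (a description and tags) and lineage (which datasets each one is derived from). This is a toy illustration under stated assumptions, not how any particular catalog product works; the class names `CatalogEntry` and `DataCatalog` and the dataset names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    description: str
    tags: set = field(default_factory=set)
    # Names of the datasets this one is derived from (its direct lineage).
    upstream: list = field(default_factory=list)


class DataCatalog:
    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def search(self, tag):
        """Return the names of all datasets carrying the given tag."""
        return sorted(e.name for e in self._entries.values() if tag in e.tags)

    def lineage(self, name):
        """Return every upstream dataset, direct or indirect."""
        seen = []
        stack = list(self._entries[name].upstream)
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.append(current)
            if current in self._entries:
                stack.extend(self._entries[current].upstream)
        return sorted(seen)


# Illustrative datasets only.
catalog = DataCatalog()
catalog.register(CatalogEntry("raw_orders", "Order events as ingested", {"raw"}))
catalog.register(CatalogEntry("raw_customers", "CRM export", {"raw", "crm"}))
catalog.register(CatalogEntry("clean_orders", "Deduplicated orders",
                              {"sales"}, ["raw_orders"]))
catalog.register(CatalogEntry("sales_report", "Weekly sales summary",
                              {"sales", "reporting"},
                              ["clean_orders", "raw_customers"]))

print(catalog.search("sales"))
# ['clean_orders', 'sales_report']
print(catalog.lineage("sales_report"))
# ['clean_orders', 'raw_customers', 'raw_orders']
```

An analyst could use the tag search to find candidate datasets and the lineage lookup to see which raw sources a report ultimately depends on.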