- Dynamic or frequently changing workloads. Use an easily scalable public cloud for your dynamic workloads, while leaving less volatile, or more sensitive, workloads to a private cloud or on-premises data center.

- Separating critical workloads from less-sensitive workloads. You might store sensitive financial or customer information on your private cloud, and use a public cloud to run the rest of your enterprise applications.

- Big data processing. It’s unlikely that you process big data continuously at a near-constant volume. Instead, you could run some of your big data analytics using highly scalable public cloud resources, while also using a private cloud to ensure data security and keep sensitive big data behind your firewall.

- Moving to the cloud incrementally, at your own pace. Put some of your workloads on a public cloud or on a small-scale private cloud. See what works for your enterprise, and continue expanding your cloud presence as needed, on public clouds, private clouds, or a mixture of the two.

- Temporary processing capacity needs. A hybrid cloud lets you allocate public cloud resources for short-term projects, at a lower cost than if you used your own data center’s IT infrastructure. That way, you don’t overinvest in equipment you’ll need only temporarily.

- Flexibility for the future. No matter how well you plan to meet today’s needs, unless you have a crystal ball, you won’t know how your needs might change next month or next year. A hybrid cloud approach lets you match your actual data management requirements to the public cloud, private cloud, or on-premises resources that are best able to handle them.

- Best of both worlds. Unless you have clear-cut needs fulfilled by only a public cloud solution or only a private cloud solution, why limit your options? Choose a hybrid cloud approach, and you can tap the advantages of both worlds simultaneously.
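The placement rules in the list above can be expressed as a simple policy. The sketch below is a minimal illustration, not a real scheduler. The `Workload` fields, the capacity model (counted in CPU cores) and the order of the rules are all assumptions, chosen to mirror the bullets: sensitive data stays private, dynamic or temporary work goes to the public cloud, and steady work fills private capacity first and bursts to the public cloud when that capacity runs out.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    sensitive: bool  # handles financial or customer data
    volatile: bool   # demand changes frequently or is short-lived
    cpu_cores: int   # capacity the workload needs (hypothetical unit)


def place(workload: Workload, private_free_cores: int) -> str:
    """Return "private" or "public" for one workload (hypothetical policy)."""
    if workload.sensitive:
        return "private"  # sensitive data stays behind the firewall
    if workload.volatile:
        return "public"   # bursty or temporary work uses elastic public capacity
    if workload.cpu_cores <= private_free_cores:
        return "private"  # steady work fills existing private capacity first
    return "public"       # overflow bursts to the public cloud


def plan(workloads: list[Workload], private_capacity: int) -> dict[str, str]:
    """Place workloads in order, tracking how much private capacity remains."""
    free = private_capacity
    placements = {}
    for w in workloads:
        target = place(w, free)
        if target == "private":
            # Sensitive workloads stay private even if this goes negative,
            # which would signal that the private side needs more capacity.
            free -= w.cpu_cores
        placements[w.name] = target
    return placements
```

For example, with 12 private cores, a sensitive 8-core ledger workload and a steady 4-core batch job stay private, while a volatile web tier and a 10-core reporting job that no longer fits go to the public cloud.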

Monday, 26 August 2024

Hybrid Cloud

Sunday, 18 August 2024

6G Research

6G research is advancing quickly, with academia, industry leaders, and government bodies worldwide collaborating to outline potential standards and capabilities. In November 2023, for example, the International Telecommunication Union (ITU) approved its 6G vision, and more standardization work is under way.

Current efforts focus on identifying the pillars that will enable 6G, such as terahertz frequency bands, advanced AI integration, and novel network architectures, and on finding solutions to the challenges these new technologies will pose.

Recent advances include breakthroughs in terahertz communications, which promise data rates up to 100 times higher than 5G. In addition, innovations in energy-efficient network components and AI algorithms for predictive networking are paving the way for a sustainable and intelligent infrastructure.

Researchers are also studying reconfigurable intelligent surfaces (RIS). An RIS is expected to redirect scattered signals along a predetermined path, making mobile communications much more efficient.
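To make "redirect scattered signals along a predetermined path" more concrete, one common idealized model treats an RIS as a row of elements, each adding a controllable phase shift. If that shift grows linearly along the surface, the generalized law of reflection says a wave arriving at angle θ_in leaves at θ_out when the phase gradient equals k(sin θ_out - sin θ_in), where k = 2π/λ. The sketch below computes that per-element phase profile. It is an assumption-heavy simplification: it ignores element losses, quantized phase states, and near-field effects, and sign conventions differ between texts.

```python
import math


def ris_phase_profile(n_elements: int, spacing_m: float, wavelength_m: float,
                      theta_in_deg: float, theta_out_deg: float) -> list[float]:
    """Phase shift (radians, wrapped to [0, 2*pi)) for each element of a 1-D RIS.

    Idealized model: the phase shift grows linearly along the surface so that
    a plane wave arriving at theta_in is redirected toward theta_out.
    """
    k = 2 * math.pi / wavelength_m  # free-space wavenumber
    delta = math.sin(math.radians(theta_out_deg)) - math.sin(math.radians(theta_in_deg))
    # Generalized law of reflection: required phase gradient is k * delta per metre.
    return [(k * n * spacing_m * delta) % (2 * math.pi) for n in range(n_elements)]
```

With half-wavelength spacing, steering a normally incident wave to 30° needs a phase step of π/2 per element. Equal incoming and outgoing angles give a flat profile, which is ordinary mirror-like reflection.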

6G roadmap: Growing from 5G to 6G

There is not yet a detailed roadmap for 6G, but based on several years of research, pre-standardization work is now starting. Research into new technology areas for 6G will then continue in parallel with the evolution of 5G. Learnings from live 5G networks and interactions with the user ecosystems will continuously feed into the research, standardization and development of 6G.

6G will build on the strengths of 5G, but it will also provide entirely new technology solutions. Around 2030 is a reasonable time frame to expect the very first 6G networks to appear.

By then, society will have been shaped by 5G for 10 years: lessons will have been learned from 5G deployments, and new needs and services will have appeared. Even with the built-in flexibility of 5G, new capabilities will be needed. This calls for further evolution, driven both by the pull of society’s needs and by the push of more advanced technological tools as they become available, and the 6G era must address it.

5G New Radio (NR) and 5G Core (5GC) evolution is continuing in 3GPP toward 5G Advanced, to ensure the success of 5G systems globally and to expand the usage of 3GPP technology by supporting different use cases and verticals. AI/ML will play an important role in 5G Advanced systems, in addition to other technology components, to provide support for extended reality (XR), reduced capability (RedCap) devices, and network energy efficiency.

6G technologies

Examples of the types of technology that will be needed to deliver 6G use cases include zero-energy sensors and actuators; next-generation AR glasses, contact lenses and haptics; and advanced edge computing and spatial mapping technologies. From our perspective at Ericsson, we have determined that creating the 6G networks of 2030 will require major technological advancements in four key areas: limitless connectivity, trustworthy systems, cognitive networks and network compute fabric.

6G security

High-trust cyber-physical systems connecting humans and intelligent machines require extreme reliability and resilience, precise positioning and sensing, and low-latency communication. This places high demands on 6G security capabilities, and also on the network’s ability to provide assurance that the required capabilities are in place. 6G networks must give this assurance to users and operators, both in deployment and during operation, in the form of security awareness and resilience. On the personal level, 6G security capabilities must respect privacy and personal data ownership in a connected world. They must be powerful yet easy to adapt to users’ preferences.

Friday, 16 August 2024

Industrial Internet of Things (IIoT)

The industrial internet of things (IIoT) is the use of smart sensors, actuators and other devices, such as radio frequency identification tags, to enhance manufacturing and industrial processes. These devices are networked together to provide data collection, exchange and analysis, and the resulting insights help improve efficiency and reliability. Also known as the industrial internet, IIoT is used in many industries, including manufacturing, energy management, utilities, and oil and gas.

IIoT uses the power of smart machines and real-time analytics to take advantage of the data that dumb machines have produced in industrial settings for years. The driving philosophy behind IIoT is that smart machines aren't only better than humans at capturing and analyzing data in real time, but they're also better at communicating important information that can be used to drive business decisions faster and more accurately.

Connected sensors and actuators enable companies to pick up on inefficiencies and problems sooner, saving time and money while also supporting business intelligence efforts. In manufacturing specifically, IIoT has the potential to provide quality control, sustainable and green practices, supply chain traceability and overall supply chain efficiency. In an industrial setting, IIoT is key to processes such as predictive maintenance, enhanced field service, energy management and asset tracking.

What are the security considerations and challenges in adopting the IIoT?

Adopting the IIoT can revolutionize how industries operate, but the challenge is to have strategies in place that advance digital transformation while maintaining security amid increased connectivity.

Industries and enterprises that handle operational technology (OT) can be expected to be well versed in areas such as worker safety and product quality. However, as OT is integrated with the internet, organizations are introducing more intelligent and automated machines, which in turn brings a slew of new challenges that require an understanding of the IIoT’s inner workings.

IIoT implementations need to focus on three areas: availability, scalability, and security. Availability and scalability may already be second nature to industrial operations, many of which have been established for a long time. Security, however, is where many stumble when integrating the IIoT into their operations. For one thing, many businesses still rely on legacy systems and processes that have run unaltered for decades, which complicates the adoption of new technologies.

The proliferation of smart devices has also introduced security vulnerabilities and raised the question of security accountability. IIoT adopters have the de facto responsibility of securing the setup and use of their connected devices, but device manufacturers have an obligation to protect their customers when they roll out their products. Manufacturers should be able to ensure their users’ security and provide preventive measures or remediation when security issues arise.

Moreover, the need for cybersecurity has come to the fore as more significant security incidents have surfaced over the years. Hackers who gain access to connected systems can not only expose the business to a major breach but also potentially force operations to shut down. To a certain extent, industries and enterprises adopting the IIoT have to plan and operate like technology companies in order to manage both physical and digital components securely.

Which industries are using IIoT?

Numerous industries use IIoT, including the following:

- The automotive industry. This industry uses industrial robots, and IIoT can help proactively maintain these systems and spot potential problems before they disrupt production. The automotive industry also uses IIoT devices to collect data from customer systems and send it to the company's systems, where it is used to identify potential maintenance issues.

- The agriculture industry. Industrial sensors collect data about soil nutrients, moisture and other variables, enabling farmers to produce an optimal crop.

- The oil and gas industry. Some oil companies maintain a fleet of autonomous aircraft that use visual and thermal imaging to detect potential problems in pipelines. This information is combined with data from other types of sensors to ensure safe operations.

- Utilities. IIoT is used in electric, water and gas metering, as well as for the remote monitoring of industrial utilities equipment such as transformers.

What are the benefits of IIoT?

IIoT devices used in the manufacturing industry offer the following benefits:

- Predictive maintenance. Organizations can use real-time data generated from IIoT systems to predict when a machine needs to be serviced. That way, the necessary maintenance can be performed before a failure occurs. This can be especially beneficial on a production line, where the failure of a machine might result in a work stoppage and huge costs. By proactively addressing maintenance issues, an organization can achieve better operational efficiency.

- More efficient field service. IIoT technologies help field service technicians identify potential issues in customer equipment before they become major issues, enabling techs to fix the problems before they affect customers. These technologies also provide field service technicians with information about which parts they need to make a repair. This ensures technicians have the necessary parts with them when making a service call.

- Asset tracking. Suppliers, manufacturers and customers can use asset management systems to track the location, status and condition of products throughout the supply chain. The system sends instant alerts to stakeholders if the goods are damaged or at risk of being damaged, giving them a chance to take immediate or preventive action to remedy the situation.

- Increased customer satisfaction. When products are connected to the IoT, the manufacturer can capture and analyze data about how customers use them, enabling manufacturers and product designers to build more customer-centric product roadmaps.

- Improved facility management. Manufacturing equipment is susceptible to wear and tear, which can be exacerbated by certain conditions in a factory. Sensors can monitor vibrations, temperature and other factors that could lead to suboptimal operating conditions.
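As a rough sketch of the predictive maintenance and facility monitoring ideas above, the function below watches a stream of vibration readings and flags the points where the rolling average of the last few samples exceeds a limit, so that maintenance can be scheduled before a failure. The window size and limit are hypothetical values, not industry thresholds. A real system may instead use statistical or machine-learning models built from each machine's history.

```python
from collections import deque
from statistics import mean


def maintenance_alerts(readings: list[float], window: int = 5,
                       limit: float = 7.0) -> list[tuple[int, float]]:
    """Return (index, rolling_mean) wherever the rolling mean exceeds limit.

    readings: vibration amplitudes from one machine, oldest first.
    window and limit are hypothetical tuning values.
    """
    recent = deque(maxlen=window)  # keeps only the last `window` readings
    alerts = []
    for i, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window:  # wait until the window is full
            avg = mean(recent)     # averaging smooths small momentary fluctuations
            if avg > limit:
                alerts.append((i, avg))
    return alerts
```

For a slowly rising series such as `[2, 2, 2, 2, 2, 3, 5, 8, 10, 12]`, only the last reading triggers an alert, when the average of the final five samples reaches 7.6.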

Tuesday, 13 August 2024

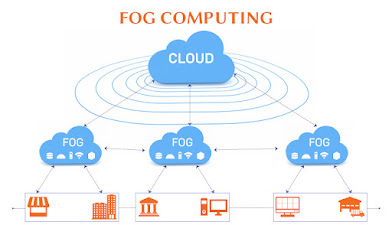

Fog Computing

Types of fog computing

- Device-level Fog Computing: Device-level fog computing utilizes low-power technology, including sensors, switches, and routers. It can be used to collect data from these devices and upload it to the cloud for analysis.

- Edge-level Fog Computing: Edge-level fog computing utilizes network-connected servers or appliances. These devices can be used to process data before it is uploaded to the cloud.

- Gateway-level Fog Computing: Fog computing at the gateway level uses devices to connect the edge to the cloud. These devices can be used to control traffic and send only relevant data to the cloud.

- Cloud-level Fog Computing: Cloud-level fog computing uses cloud-based servers or appliances. These devices can be used to process data before it is sent to end users.

Benefits of fog computing

- Bandwidth conservation. Fog computing reduces the volume of data that is sent to the cloud, thereby reducing bandwidth consumption and related costs.

- Improved response time. Because the initial data processing occurs near the data, latency is reduced, and overall responsiveness is improved. The goal is to provide millisecond-level responsiveness, enabling data to be processed in near-real time.

- Network-agnostic. Fog computing generally places compute resources at the LAN level rather than at the device level, as edge computing does, so the network can be considered part of the fog computing architecture. At the same time, fog computing is network-agnostic in the sense that the network can be wired, Wi-Fi or even 5G.

Drawbacks of fog computing

- Physical location. Because fog computing is tied to a physical location, it undermines some of the "anytime/anywhere" benefits associated with cloud computing.

- Potential security issues. Under the right circumstances, fog computing can be subject to security issues, such as Internet Protocol (IP) address spoofing or man-in-the-middle (MitM) attacks.

- Startup costs. Fog computing is a solution that utilizes both edge and cloud resources, which means that there are associated hardware costs.

- Ambiguous concept. Even though fog computing has been around for several years, its definition is still somewhat ambiguous, and various vendors define it differently.
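The bandwidth-conservation and gateway-level ideas above amount to processing data near its source and forwarding only what matters. Here is a minimal sketch, assuming a hypothetical forwarding policy: one summary record per batch, plus any individual readings outside a normal range. The thresholds and record format are illustrative, not part of any fog computing standard.

```python
from statistics import mean


def fog_filter(readings: list[float], low: float, high: float) -> dict:
    """Process one batch of sensor readings locally at a fog node.

    Hypothetical policy: forward one summary record for the whole batch,
    plus every individual reading outside the normal [low, high] band.
    """
    anomalies = [r for r in readings if not (low <= r <= high)]
    summary = {
        "count": len(readings),
        "mean": mean(readings),
        "min": min(readings),
        "max": max(readings),
    }
    return {"summary": summary, "anomalies": anomalies}


# Example batch, e.g. temperatures in degrees Celsius from one machine.
batch = [20.0, 21.0, 22.0, 35.0, 21.0]
out = fog_filter(batch, low=15.0, high=30.0)
records_sent = 1 + len(out["anomalies"])  # one summary plus each anomaly
print(f"{len(batch)} readings in, {records_sent} records sent to the cloud")
```

Here five raw readings become two forwarded records, a summary and one anomaly. The saving grows with batch size, at the cost of the cloud no longer seeing every raw value.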

Sunday, 11 August 2024

Decentralized Finance (DeFi)

Decentralized finance, also known as DeFi, uses cryptocurrency and blockchain technology to manage financial transactions.

DeFi aims to democratize finance by replacing legacy, centralized institutions with peer-to-peer relationships that can provide a full spectrum of financial services, from everyday banking, loans and mortgages, to complicated contractual relationships and asset trading.

How Decentralized Finance (DeFi) Works

DeFi combines security protocols, connectivity, and advances in software and hardware to build peer-to-peer financial networks. This system eliminates intermediaries such as banks and other financial services companies, which charge businesses and customers for their services; today those services are necessary because the current system offers no other way to complete transactions. DeFi uses blockchain technology to reduce the need for these intermediaries.

How DeFi is Being Used Now

DeFi is making its way into a wide variety of simple and complex financial transactions. It’s powered by decentralized apps called “dapps,” or other programs called “protocols.” Dapps and protocols handle transactions in the two main cryptocurrencies, Bitcoin (BTC) and Ethereum (ETH).

While Bitcoin is the more popular cryptocurrency, Ethereum is much more adaptable to a wider variety of uses, meaning much of the dapp and protocol landscape uses Ethereum-based code.

Here are some of the ways dapps and protocols are already being used:

- Traditional financial transactions. Everything from payments, securities trading and insurance to lending and borrowing is already happening with DeFi.

- Decentralized exchanges (DEXs). Right now, most cryptocurrency investors use centralized exchanges like Coinbase. DEXs facilitate peer-to-peer financial transactions and let users retain control over their money.

- E-wallets. DeFi developers are creating digital wallets that can operate independently of the largest cryptocurrency exchanges and give investors access to everything from cryptocurrency to blockchain-based games.

- Stablecoins. While cryptocurrencies are notoriously volatile, stablecoins attempt to stabilize their value by pegging it to non-crypto assets such as the U.S. dollar.

- Yield harvesting. Dubbed the “rocket fuel” of crypto, yield harvesting lets speculative investors lend crypto through DeFi and potentially reap big rewards if the proprietary coins that DeFi borrowing platforms pay them for the loan appreciate rapidly.

- Non-fungible tokens (NFTs). NFTs create digital assets out of typically non-tradable assets, like videos of slam dunks or the first tweet on Twitter. NFTs commodify the previously uncommodifiable.

- Flash loans. These are cryptocurrency loans that borrow and repay funds in the same transaction. Sound counterintuitive? Here’s how it works: borrowers can potentially make money by entering into a contract encoded on the Ethereum blockchain (no lawyers needed) that borrows funds, executes a transaction and repays the loan instantly. If the transaction can’t be executed, or would result in a loss, the whole operation is reversed and the funds automatically go back to the lender. If you do make a profit, you can pocket it, minus any interest charges or fees. Think of flash loans as decentralized arbitrage.
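The all-or-nothing behavior of flash loans can be illustrated with a toy simulation. This is not Ethereum code. The dict-based ledger, the `strategy` callback and the fee expressed in basis points are all assumptions. On a real blockchain, the equivalent rollback comes from the transaction reverting, so the lender's funds never leave unless the loan is repaid within the same transaction.

```python
class FlashLoanFailed(Exception):
    """Raised when a flash loan cannot be completed and must be reverted."""


def flash_loan(pool: dict, borrower: dict, amount: int, fee_bps: int, strategy) -> None:
    """Simulate an all-or-nothing flash loan on a toy in-memory ledger.

    pool and borrower are dicts with an integer "balance" (smallest coin units).
    strategy is a hypothetical callable that uses the borrowed funds, for
    example an arbitrage trade, and updates borrower["balance"] accordingly.
    """
    snapshot = (pool["balance"], borrower["balance"])
    try:
        if amount > pool["balance"]:
            raise FlashLoanFailed("insufficient liquidity")
        pool["balance"] -= amount          # 1. borrow
        borrower["balance"] += amount
        strategy(borrower)                 # 2. execute the transaction
        owed = amount + amount * fee_bps // 10_000
        if borrower["balance"] < owed:
            raise FlashLoanFailed("loan cannot be repaid")
        borrower["balance"] -= owed        # 3. repay loan plus fee in the same step
        pool["balance"] += owed
    except Exception:
        # Any failure reverts every balance change, like a reverted transaction.
        pool["balance"], borrower["balance"] = snapshot
        raise
```

With a 9-basis-point fee (an arbitrary example value), a strategy that nets 5,000 units on a 100,000-unit loan leaves the borrower with 4,910 units. A losing strategy raises `FlashLoanFailed` and leaves both balances exactly as they were.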

What are the key benefits and risks to DeFi users?

Overall, DeFi offers users more control over their money. Financial assets can be transferred or purchased in seconds or minutes. Service fees would largely be abolished, as no third-party companies would be involved in transactions. Your money would be converted to a “fiat-backed stablecoin” and made accessible via a digital wallet, so you wouldn’t have to deposit funds into a bank. And because bank accounts would no longer be necessary, almost anyone with an internet connection could access the same financial goods and services.

The biggest risk is that DeFi is unregulated. There is no FDIC insurance (or backing from any other regulatory entity) to protect your funds if a major glitch, error, or cyberattack makes them unavailable or causes them to disappear.

Also, the technology is so new that there’s no unified or comprehensive way to determine whether any part of a DeFi system is operating at optimal capacity or is free from scams. In theory, each technological component in a DeFi ecosystem should operate in a fast, efficient, and secure manner. In practice, however, it’s still untested.

How to Get Involved with DeFi

Get a Crypto Wallet

“Start by setting up an Ethereum wallet like Metamask, then funding it with Ethereum,” says Cosman. “Self-custody wallets are your ticket to the world of DeFi, but make sure to save your public and private key. Lose these, and you won’t be able to get back into your wallet.”

Trade Digital Assets

“I recommend trading a small amount of two assets on a decentralized exchange such as Uniswap,” says Doug Schwenk, chairman of Digital Asset Research. “Trying this exercise will help a crypto enthusiast understand the current landscape, but be prepared to lose everything while you’re learning which assets and platforms are best and how to manage risks.”

Look into Stablecoins

“An exciting way to try out DeFi without exposing oneself to the price swings of an underlying asset is to try out TrueFi, which offers competitive returns on stablecoins (AKA dollar-backed tokens, which aren’t subject to price movements),” Cosman says.

Wednesday, 7 August 2024

Smart Contracts

A smart contract (or cryptocontract) is a computer program that directly and automatically controls the transfer of digital assets between parties under certain conditions. It serves the same purpose as a traditional contract while also enforcing that contract automatically. Smart contracts execute exactly as they were set up (coded, programmed) by their creators. Just as a traditional contract is enforceable by law, a smart contract is enforceable by code.

- Bitcoin was the first network to use a form of smart contract, using it to transfer value from one person to another.

- These contracts apply basic conditions, such as checking whether the value to be transferred is actually available in the sender's account.

- Later, the Ethereum platform emerged. It was considered more powerful precisely because developers could write custom contracts in a Turing-complete language.

- By contrast, contracts on the Bitcoin network are written in Bitcoin Script, a Turing-incomplete language, which restricts the potential of smart contracts on Bitcoin.

- Common smart contract platforms include Ethereum, Solana, Polkadot, and Hyperledger Fabric.

How smart contracts work

Smart contracts work by following simple “if/when…then…” statements that are written into code on a blockchain. A network of computers executes the actions when predetermined conditions are met and verified.

These actions might include releasing funds to the appropriate parties, registering a vehicle, sending notifications or issuing a ticket. The blockchain is then updated when the transaction is completed. That means the transaction cannot be changed, and only parties who have been granted permission can see the results.
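Here is a minimal sketch of the "if/when...then..." pattern as an escrow, written in Python for readability rather than in a real contract language such as Solidity. The parties, the amount and the deadline expressed as a block height are hypothetical. The point is that the rules are fixed in code and settle the deal exactly once.

```python
from dataclasses import dataclass, field


@dataclass
class EscrowContract:
    """Toy contract: release payment on delivery, refund after a deadline."""
    buyer: str
    seller: str
    amount: int
    deadline: int  # hypothetical block height after which the buyer is refunded
    balances: dict = field(default_factory=dict)
    settled: bool = False

    def on_block(self, height: int, delivered: bool) -> None:
        """Called for each new block; every node applies the same rules."""
        if self.settled:
            return                    # a completed contract cannot pay out twice
        if delivered:                 # if delivery is confirmed ...
            self._pay(self.seller)    # ... then release funds to the seller
        elif height > self.deadline:  # when the deadline passes without delivery ...
            self._pay(self.buyer)     # ... then refund the buyer

    def _pay(self, party: str) -> None:
        self.balances[party] = self.balances.get(party, 0) + self.amount
        self.settled = True
```

Because the contract records that it has settled, a second delivery confirmation changes nothing, which mirrors how a completed blockchain transaction cannot be changed.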

Within a smart contract, there can be as many stipulations as needed to satisfy the participants that the task will be completed satisfactorily. To establish the terms, participants must determine how transactions and their data are represented on the blockchain, agree on the “if/when...then…” rules that govern those transactions, explore all possible exceptions and define a framework for resolving disputes.

Then a developer can program the smart contract, although organizations that use blockchain for business increasingly provide templates, web interfaces and other online tools to simplify structuring smart contracts.

Types of Smart Contracts

1. Smart Legal Contract:

Smart legal contracts are backed by legal guarantees. They follow the structure of traditional contracts: “If this occurs, then this will occur.” Because they are stored on a blockchain and cannot be altered, smart legal contracts provide more transparency between contracting parties than traditional documents. The parties execute them using digital signatures. When certain conditions are met, smart legal contracts can operate on their own, for example by paying a debt when a predetermined date is reached. If stakeholders don’t comply, there may be serious legal ramifications.

2. Decentralized Autonomous Organizations (DAOs):

A decentralized autonomous organization, or DAO, is a blockchain-based entity with a shared goal under collective governance. DAOs are democratic organizations whose members’ voting powers are granted by a smart contract. A DAO has no executive or president. Instead, the organization’s operations and the distribution of its assets are governed by rules incorporated into the contract’s code. One example of this kind of smart contract is VitaDAO, which uses the technology to power a community dedicated to scientific inquiry.
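Because a DAO's voting rules live in its contract code, they can be written down precisely. As an illustration only, the function below implements one common model, token-weighted voting with a quorum. The 50% quorum and the simple-majority rule are assumptions; each real DAO encodes its own rules.

```python
def proposal_passes(votes: dict[str, str], token_balances: dict[str, int],
                    quorum_fraction: float = 0.5) -> bool:
    """Token-weighted vote: one token, one vote.

    votes maps a member to "yes" or "no"; token_balances maps every member to
    the governance tokens they hold. The proposal passes if the tokens that
    voted reach the quorum and "yes" outweighs "no". Both rules are
    hypothetical defaults.
    """
    total_supply = sum(token_balances.values())
    yes = sum(token_balances.get(m, 0) for m, v in votes.items() if v == "yes")
    no = sum(token_balances.get(m, 0) for m, v in votes.items() if v == "no")
    quorum_met = (yes + no) >= quorum_fraction * total_supply
    return quorum_met and yes > no
```

With holdings of 40, 35 and 25 tokens, a 40-to-25 vote passes, while a lone 40-token "yes" fails because turnout stays below half the supply.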

3. Application Logic Contracts:

Application logic contracts, or ALCs, consist of application-based code that typically stays in sync with multiple other blockchain contracts. They enable device-to-device interactions, such as integrating blockchain with the Internet of Things. Unlike other kinds of smart contracts, they are signed between computers and other contracts rather than between people or organizations.

Features of Smart Contracts

- Distributed: Everyone on the network is guaranteed to have a copy of all the conditions of the smart contract and they cannot be changed by one of the parties. A smart contract is replicated and distributed by all the nodes connected to the network.

- Deterministic: Smart contracts perform only the functions they were designed for, and only when the required conditions are met. The final outcome does not vary, no matter who executes the smart contract.

- Immutable: Once deployed, a smart contract cannot be changed; it can only be removed if that functionality was implemented beforehand.

- Autonomy: There is no third party involved. The contract is made by you and shared between the parties. No intermediaries are involved, which reduces the scope for coercion and grants full authority to the dealing parties. Also, the smart contract is maintained and executed by all the nodes on the network, removing all controlling power from any one party’s hand.

- Customizable: Before being deployed, smart contracts can be customized to do what the user wants them to do.

- Transparent: On public blockchains, smart contracts are stored on a distributed ledger, so the code is visible to everyone, whether or not they are participants in the smart contract.

- Trustless: No third party is required to verify the integrity of the process or to check whether the required conditions are met.

- Self-verifying: Smart contracts verify their own conditions automatically.

- Self-enforcing: Smart contracts enforce their terms automatically once the conditions and rules are met at each stage.

Capabilities of Smart Contracts

- Accuracy: Smart contracts are only as accurate as the code a programmer has written for them.

- Automation: Smart contracts can automate tasks and processes that would otherwise be done manually.

- Speed: Smart contracts use software code to automate tasks, thereby reducing the time it takes to maneuver through all the human interaction-related processes. Because everything is coded, the time taken to do all the work is the time taken for the code in the smart contract to execute.

- Backup: Every node in the blockchain maintains the shared ledger, which provides a highly redundant backup.

- Security: Cryptography helps keep assets safe. Even if an attacker manages to alter a block, they would also have to modify every block that comes after it. This is extremely difficult and computationally intensive, and practically impossible for a small or medium-sized organization.

- Savings: Smart contracts save money because they eliminate intermediaries from the process. Paperwork costs are also minimal or zero.

- Manages information: Smart contracts manage user agreements and store information about an application, such as domain registrations and membership records.

- Multi-signature accounts: Smart contracts support multi-signature accounts to distribute funds as soon as all the parties involved confirm the agreement.
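The Security point above relies on each block storing the hash of the block before it. The sketch below builds a tiny hash chain with Python's standard `hashlib` and shows that editing one block invalidates it and that, once the attacker recomputes that block's hash to hide the change, the break simply moves to the next block. It deliberately omits consensus, signatures and proof-of-work, which are what make rewriting a real chain so costly; the block layout is an assumption.

```python
import hashlib
import json
from typing import Optional


def block_hash(block: dict) -> str:
    """SHA-256 of the block's contents, excluding its own stored hash."""
    payload = json.dumps(
        {"index": block["index"], "data": block["data"], "prev_hash": block["prev_hash"]},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode()).hexdigest()


def build_chain(records: list[str]) -> list[dict]:
    """Link records into blocks, each storing the hash of the previous block."""
    chain, prev = [], "0" * 64  # the first block points at a dummy hash
    for i, data in enumerate(records):
        block = {"index": i, "data": data, "prev_hash": prev}
        block["hash"] = block_hash(block)
        chain.append(block)
        prev = block["hash"]
    return chain


def first_invalid(chain: list[dict]) -> Optional[int]:
    """Index of the first block whose hash or back-link no longer checks out."""
    for i, block in enumerate(chain):
        if block["hash"] != block_hash(block):
            return i  # contents were edited after hashing
        if i > 0 and block["prev_hash"] != chain[i - 1]["hash"]:
            return i  # link to the previous block is broken
    return None
```

Editing block 1 makes `first_invalid` report 1; recomputing block 1's hash moves the break to block 2, and so on down the chain, which is why every later block would have to be rewritten.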

Monday, 5 August 2024

Cryptocurrency

Cryptocurrency, sometimes called crypto-currency or crypto, is any form of currency that exists digitally or virtually and uses cryptography to secure transactions. Cryptocurrencies don't have a central issuing or regulating authority, instead using a decentralized system to record transactions and issue new units.

Cryptocurrency is a digital payment system that doesn't rely on banks to verify transactions. It’s a peer-to-peer system that can enable anyone anywhere to send and receive payments. Instead of being physical money carried around and exchanged in the real world, cryptocurrency payments exist purely as digital entries to an online database describing specific transactions. When you transfer cryptocurrency funds, the transactions are recorded in a public ledger. Cryptocurrency is stored in digital wallets.
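Because payments exist only as ledger entries, a wallet's balance is simply the result of replaying those entries in order. The sketch below uses a simplified account-style model with hypothetical names and a made-up convention for newly issued coins. Bitcoin itself tracks unspent transaction outputs rather than account balances, and real networks also verify a digital signature on every entry.

```python
from collections import defaultdict
from typing import Optional

Entry = tuple[Optional[str], str, int]  # (sender, receiver, amount)


def balances_from_ledger(ledger: list[Entry]) -> tuple[dict[str, int], list[Entry]]:
    """Replay ledger entries in order to derive each wallet's balance.

    A sender of None marks newly issued coins (a hypothetical convention).
    Transfers the sender cannot cover are skipped, as a network would reject them.
    """
    balances = defaultdict(int)
    accepted = []
    for sender, receiver, amount in ledger:
        if sender is not None:
            if balances.get(sender, 0) < amount:
                continue  # insufficient funds: entry is rejected
            balances[sender] -= amount
        balances[receiver] += amount
        accepted.append((sender, receiver, amount))
    return dict(balances), accepted
```

In a ledger where Bob receives 20 coins and then tries to send 30, that transfer is rejected, and only his later 5-coin payment is applied.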

Cryptocurrency gets its name from the cryptography it uses to verify transactions. This means sophisticated cryptographic techniques are involved in storing and transmitting cryptocurrency data between wallets and to public ledgers. The aim of this cryptography is to provide security and safety.

The first cryptocurrency was Bitcoin, which was founded in 2009 and remains the best known today. Much of the interest in cryptocurrencies is to trade for profit, with speculators at times driving prices skyward.

Types of Cryptocurrency

- Utility: XRP and ETH are two examples of utility tokens. They serve specific functions on their respective blockchains.

- Transactional: Tokens designed to be used as a payment method. Bitcoin is the most well-known of these.

- Governance: These tokens represent voting or other rights on a blockchain or protocol, such as Uniswap’s UNI token.

- Platform: These tokens support applications built to use a blockchain, such as Solana.

- Security tokens: Tokens representing ownership of an asset, such as a stock that has been tokenized (value transferred to the blockchain). MS Token is an example of a securitized token. If you can find one of these for sale, you can gain partial ownership of the Millennium Sapphire.

Cryptocurrency examples

There are thousands of cryptocurrencies. Some of the best known include:

Bitcoin:

Founded in 2009, Bitcoin was the first cryptocurrency and is still the most commonly traded. The currency was developed by Satoshi Nakamoto – widely believed to be a pseudonym for an individual or group of people whose precise identity remains unknown.

Ethereum:

Developed in 2015, Ethereum is a blockchain platform with its own cryptocurrency, called Ether (ETH) or Ethereum. It is the most popular cryptocurrency after Bitcoin.

Litecoin:

This currency is most similar to Bitcoin but has moved more quickly to develop new innovations, including faster payments and processes that allow more transactions.

Ripple:

Ripple is a distributed ledger system that was founded in 2012. Ripple can be used to track different kinds of transactions, not just cryptocurrency. The company behind it has worked with various banks and financial institutions.

Non-Bitcoin cryptocurrencies are collectively known as “altcoins” to distinguish them from the original.

Is Cryptocurrency a Safe Investment?

- User risk: Unlike traditional finance, there is no way to reverse or cancel a cryptocurrency transaction after it has already been sent. By some estimates, about one-fifth of all bitcoins are now inaccessible due to lost passwords or incorrect sending addresses.

- Regulatory risks: The regulatory status of some cryptocurrencies is still unclear in many areas, with some governments seeking to regulate them as securities, currencies, or both. A sudden regulatory crackdown could make it challenging to sell cryptocurrencies or cause a market-wide price drop.

- Counterparty risks: Many investors and merchants rely on exchanges or other custodians to store their cryptocurrency. Theft or loss by one of these third parties could result in losing one's entire investment.

- Management risks: Due to the lack of coherent regulations, there are few protections against deceptive or unethical management practices. Many investors have lost large sums to management teams that failed to deliver a product.

- Programming risks: Many investment and lending platforms use automated smart contracts to control the movement of user deposits. An investor using one of these platforms assumes the risk that a bug or exploit in these programs could cause them to lose their investment.

- Market manipulation: Manipulation remains a substantial problem in cryptocurrency, with influential people, organizations, and exchanges sometimes acting unethically to move prices.

Cryptocurrency fraud and cryptocurrency scams

Unfortunately, cryptocurrency crime is on the rise. Cryptocurrency scams include:

- Fake websites: Bogus sites that feature fake testimonials and crypto jargon, promising massive, guaranteed returns as long as you keep investing.

- Virtual Ponzi schemes: Cryptocurrency criminals promote non-existent opportunities to invest in digital currencies and create the illusion of huge returns by paying off old investors with new investors’ money. One scam operation, BitClub Network, raised more than $700 million before its perpetrators were indicted in December 2019.

- "Celebrity" endorsements: Scammers pose online as billionaires or other well-known figures who promise to multiply your investment in a virtual currency but instead steal what you send. They may also use messaging apps or chat rooms to spread rumours that a famous businessperson is backing a specific cryptocurrency. Once they have encouraged investors to buy and driven up the price, the scammers sell their stake, and the currency's value drops.

- Romance scams: The FBI warns of a trend in online dating scams, where tricksters persuade people they meet on dating apps or social media to invest or trade in virtual currencies. The FBI's Internet Crime Complaint Center fielded more than 1,800 reports of crypto-focused romance scams in the first seven months of 2021, with losses reaching $133 million.
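The core mechanism of a Ponzi scheme, paying "returns" to earlier investors out of later investors' deposits, can be illustrated with a toy model. This is a simplified sketch under stated assumptions, not a model of BitClub Network or any real scheme: the function name `ponzi_collapse_period`, the fixed promised return per period, and the absence of principal withdrawals are all hypothetical.

```python
from typing import Optional


def ponzi_collapse_period(new_deposits: list, promised_pct: int) -> Optional[int]:
    """Toy Ponzi model (hypothetical): promised returns are paid only from deposits.

    Returns the index of the first period in which the operator cannot pay the
    promised return on all money deposited so far, or None if the scheme
    survives every period given. Assumes no real investment income and no
    principal withdrawals.
    """
    cash = 0       # money the operator currently holds
    principal = 0  # total deposited by all investors so far
    for period, deposit in enumerate(new_deposits):
        cash += deposit
        principal += deposit
        # There is no genuine income: payouts can only come from cash on hand.
        payout = principal * promised_pct // 100  # integer math keeps the example exact
        if payout > cash:
            return period
        cash -= payout
    return None


# Inflows that stop, and even inflows that stay flat, both end in collapse.
print(ponzi_collapse_period([100, 100, 100, 0, 0, 0, 0, 0], 20))  # 6
print(ponzi_collapse_period([100] * 10, 20))                      # 9
```

In this model the obligations grow with total principal while inflows stay fixed, so even a steady stream of new money eventually runs out; only ever-faster recruitment postpones the collapse.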

Saturday, 3 August 2024

Zero-Trust Security

- Terminate every connection: Technologies such as firewalls use a “passthrough” approach, inspecting files as they are delivered, so by the time a malicious file is detected, the alert often comes too late. An effective zero trust solution terminates every connection so that an inline proxy architecture can inspect all traffic, including encrypted traffic, in real time, before it reaches its destination, to prevent ransomware, malware, and other threats.

- Protect data using granular context-based policies: Zero trust policies verify access requests and rights based on context, including user identity, device, location, type of content, and the application being requested. Policies are adaptive, so user access privileges are continually reassessed as context changes.

- Reduce risk by eliminating the attack surface: With a zero trust approach, users connect directly to the apps and resources they need, never to networks (see ZTNA). Direct user-to-app and app-to-app connections eliminate the risk of lateral movement and prevent compromised devices from infecting other resources. Plus, users and apps are invisible to the internet, so they can’t be discovered or attacked.

- The underlying architecture: Traditional models relied on approved IP addresses, ports, and protocols for access control, and on remote-access VPNs for trust validation.

- An inline approach: Zero trust treats all traffic as potentially hostile, even traffic inside the network perimeter. Traffic is blocked until it is validated by specific attributes such as a fingerprint or identity.

- Context-aware policies: This stronger security approach stays with the workload wherever it communicates, whether in a public cloud, a hybrid environment, a container, or an on-premises network architecture.

- Multifactor authentication: Validation is based on the user's identity, device, and location.

- Environment-agnostic security: Protection applies regardless of the communication environment, enabling secure cross-network communication without the need for architectural changes or policy updates.

- Business-oriented connectivity: A zero trust model uses business policies for connecting users, devices, and applications securely across any network, facilitating secure digital transformation.
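The context-based, default-deny policies described above can be sketched as a single decision function that runs on every request. This is a minimal illustration, not how any particular zero trust product or ZTNA service works: the `AccessRequest` and `Rule` types, their fields, and the `evaluate` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """Context attached to a single request (hypothetical fields)."""
    user: str
    mfa_verified: bool
    device_compliant: bool
    location: str
    app: str
    content_type: str


@dataclass(frozen=True)
class Rule:
    """Grants access to one application, never to a whole network (hypothetical)."""
    app: str
    allowed_users: frozenset
    allowed_locations: frozenset
    blocked_content_types: frozenset = frozenset()


def evaluate(request: AccessRequest, rules: list) -> bool:
    """Default deny: allow only if identity, device, and context all check out."""
    # Identity and device posture are checked on every request, wherever it originates.
    if not (request.mfa_verified and request.device_compliant):
        return False
    for rule in rules:
        if rule.app != request.app:
            continue  # a rule for one app grants nothing for any other app
        if (
            request.user in rule.allowed_users
            and request.location in rule.allowed_locations
            and request.content_type not in rule.blocked_content_types
        ):
            return True
    return False  # nothing matched, so the request stays blocked


rules = [
    Rule(
        app="payroll",
        allowed_users=frozenset({"alice"}),
        allowed_locations=frozenset({"office", "home"}),
        blocked_content_types=frozenset({"executable"}),
    )
]
at_office = AccessRequest("alice", True, True, "office", "payroll", "document")
at_airport = AccessRequest("alice", True, True, "airport", "payroll", "document")
print(evaluate(at_office, rules))   # True
print(evaluate(at_airport, rules))  # False
```

Because the decision is recomputed for each request rather than cached at login, a change in context (here, the same user moving from the office to an airport) changes the outcome, which mirrors the continual reassessment described above.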
